Today's language models have an encyclopedic understanding of the public world, but they know nothing about your business. That is the paradox of modern AI: you have access to the most powerful tool of the decade, yet it cannot tell you your employees' vacation balances or the technical specifications of your latest product. To turn artificial intelligence into a real driver of growth, you need to connect these models to your own data through RAG, or Retrieval-Augmented Generation.

What is RAG (Retrieval-Augmented Generation)?

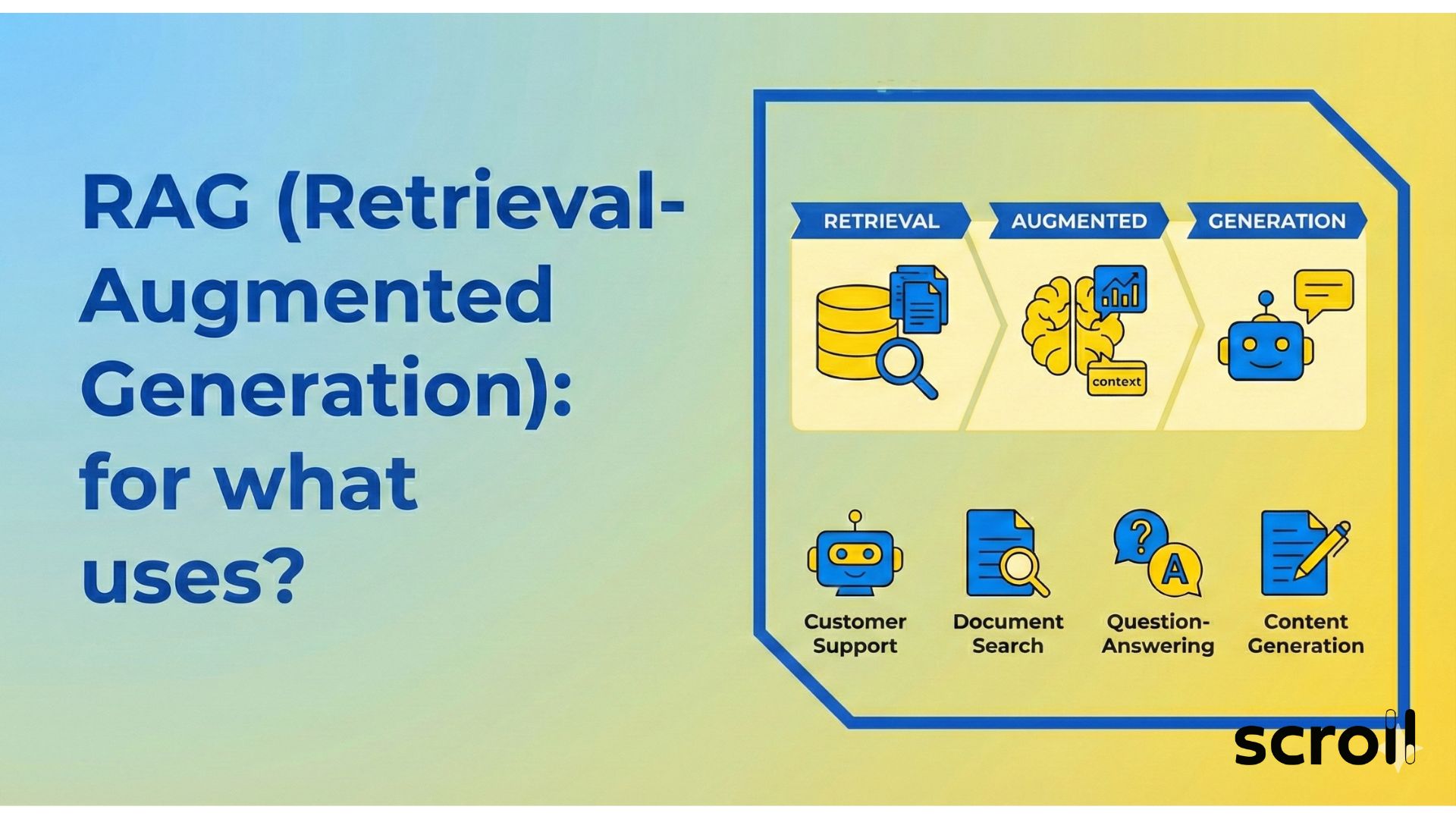

Retrieval-Augmented Generation, often referred to by the acronym RAG, is a technical architecture that improves the output of a large language model (LLM) by giving it access to an external knowledge base before it generates a response. It is the missing bridge between the linguistic power of AI and the operational reality of your business.

Imagine an open-book exam. A standard LLM, like GPT or Claude, takes the exam with only what it learned during its initial training. If it does not know the answer, it may invent convincing-sounding facts to satisfy the request. That is what we call a hallucination. With a RAG architecture, the AI is allowed to look up information in a reliable reference manual, namely your data, before writing its answer.

This approach addresses two major shortcomings of traditional natural language processing. First, it solves the problem of data obsolescence, because the model's knowledge is no longer frozen at training time. Second, it provides the missing private context. By connecting generative AI to your external data, you turn a generalist tool into an expert in your business.

5 Business use cases where RAG is essential

The adoption of RAG is not limited to simple technological innovation. It is a concrete response to business needs for productivity and reliability. Here are the areas where this technology excels and is transforming operations.

Smart Chatbots and Augmented Customer Support

Customer service is the historical playground for automation attempts, often with disappointing results in the past. Chatbots powered by RAG are radically changing the situation. Unlike traditional bots based on rigid decision trees that frustrate customers, a RAG assistant understands user intent and draws on technical documentation, ticket history, and product knowledge bases to formulate its response.

Accuracy of answers is the key factor here. The system doesn't just redirect to a generic FAQ page. It summarizes the exact solution to the customer's problem in real time. This greatly improves the user experience while relieving support teams of level 1 requests. In addition, optimizing AI responses lets you adapt the tone and complexity of the answer to the profile of the person asking, whether they are a novice or a technical expert.

Exploitation of corporate documentation

The majority of a company's knowledge sleeps in inaccessible silos like PDFs, intranets, SharePoint sites, or Notion workspaces. Traditional keyword search in these environments is often ineffective because it requires the employee to know the exact term used in the document. RAG makes it possible to query internal data in natural language.

An employee can now ask a complex question such as “What is the procedure for validating expense reports for a trip outside the EU with a VIP client?” and get a synthesized response. This ability to converse with corporate documentation generates massive productivity gains. We are moving from a logic of searching for documents, which takes time, to a logic of finding targeted, immediate information.

Decision Support and Legal Analysis

In regulated industries such as law, finance, or insurance, the smallest mistake can be costly. The reliability of answers is non-negotiable. Using RAG provides strong factual grounding. When a lawyer queries a contract database via a RAG architecture, the model generates its response by explicitly citing the clauses and articles on which it relies.

This traceability allows rapid human verification and establishes the necessary trust in hybrid AI systems. The tool does not replace the expert; it extends their analytical capabilities by processing volumes of data that a human could not absorb in a reasonable amount of time. It is a tireless research assistant that does the groundwork for the analysis.

Employee Onboarding and Training

Onboarding new talent is a time-consuming process for managers and HR. A virtual assistant based on RAG can serve as a round-the-clock mentor for new hires. It answers questions about corporate culture, internal processes, or the use of business tools based on reliable sources of information validated by HR.

This ensures that the information transmitted stays up to date, unlike static welcome booklets that become obsolete as soon as they are printed. Knowledge is updated simply by adding the new internal policy documents to the vector database, without requiring complex retraining or extensive technical intervention.
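To make the "update without retraining" point concrete, here is a minimal sketch of an index in which publishing a new policy version is a single write. `PolicyIndex`, `placeholder_embed`, and the document ID are hypothetical names invented for this illustration; a real deployment would call an embedding model and a vector database instead.

```python
from typing import Callable


class PolicyIndex:
    """Toy in-memory index keyed by document ID (hypothetical, for illustration)."""

    def __init__(self, embed: Callable[[str], list[float]]):
        self._embed = embed
        self._entries: dict[str, tuple[list[float], str]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Insert a new document, or overwrite the stale version with the same ID."""
        self._entries[doc_id] = (self._embed(text), text)

    def delete(self, doc_id: str) -> None:
        """Remove a withdrawn document; unknown IDs are ignored."""
        self._entries.pop(doc_id, None)

    def text_of(self, doc_id: str) -> str:
        """Return the currently indexed text for a document ID."""
        return self._entries[doc_id][1]

    def __len__(self) -> int:
        return len(self._entries)


def placeholder_embed(text: str) -> list[float]:
    # Stand-in only: a real system would call an embedding model here.
    return [float(len(text))]


index = PolicyIndex(placeholder_embed)
index.upsert("remote-work-policy", "Employees may work remotely two days per week.")
# HR publishes a revision: the old version is replaced, no model is retrained.
index.upsert("remote-work-policy", "Employees may work remotely three days per week.")
```

Keying entries by a stable document ID is one way to keep outdated versions from lingering next to their replacements.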

Augmented search engines for e-commerce

Augmented search engines represent the future of browsing complex e-commerce sites or large B2B catalogs. Instead of filtering by categories, the user can express a functional need: “I am looking for a storage solution compatible with my Model X server that optimizes energy consumption.”

The system uses semantic search to understand the technical characteristics and compatibility of products, and so offers relevant recommendations that go beyond simple keyword matching. It is a direct application of data vectorization to improve conversion and guide the customer to the right product without friction.

Why choose the RAG architecture over fine-tuning?

This is a recurring question among technical decision-makers: should you retrain a model (fine-tuning) or use RAG? While the comparison between RAG and a fine-tuned LLM deserves nuance, RAG wins in the vast majority of business applications for pragmatic and economic reasons.

The first reason is financial. The cost of training an LLM to teach it new knowledge is prohibitive, and the training must be repeated with each significant data change. With RAG, real-time data integration is possible: you only need to index the new document for it to be immediately “known” by the system. This offers an agility that fine-tuning cannot match.

The second reason is truthfulness. Fine-tuning is effective at teaching the model a specific style, business vocabulary, or response format, but it remains subject to factual hallucinations. The model “thinks” it knows, but may be wrong. RAG excels at reducing hallucinations because the model is instructed to answer only from the context supplied by the retrieval system. If the information is not in the retrieved documents, the system is configured to say “I don't know” rather than invent a plausible but false answer.

Finally, comparing RAG with standard generative AI highlights transparency. RAG makes it possible to trace each generated sentence back to a source, offering an auditability that is impossible with a “black box” model that simply memorized information during its training. You know which documents the AI relied on to produce its answer.
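The “I don't know” behavior and the source traceability described above can be sketched as a small guard placed in front of the model. This is one possible approach, not a definitive implementation: the `0.75` threshold, the function names, and the file names are assumptions made for the example, and `stub_generate` stands in for a real LLM call.

```python
from typing import Callable

FALLBACK = "I don't know."


def answer_with_sources(
    scored_chunks: list[tuple[float, str, str]],
    generate: Callable[[list[str]], str],
    min_score: float = 0.75,  # arbitrary threshold chosen for this example
) -> dict:
    """Answer only from sufficiently similar chunks and report where they came from.

    scored_chunks holds (similarity, source, text) tuples from the retrieval step.
    """
    relevant = [(source, text) for score, source, text in scored_chunks if score >= min_score]
    if not relevant:
        # Nothing relevant was retrieved: refuse instead of letting the model guess.
        return {"answer": FALLBACK, "sources": []}
    answer = generate([text for _, text in relevant])
    return {"answer": answer, "sources": [source for source, _ in relevant]}


def stub_generate(passages: list[str]) -> str:
    # Stand-in for a real LLM call, so the sketch runs offline.
    return "Based on the retrieved passages: " + " ".join(passages)


retrieved = [
    (0.91, "expenses.pdf#p4", "Trips outside the EU require director approval."),
    (0.32, "cafeteria.md", "The cafeteria opens at noon."),
]
grounded = answer_with_sources(retrieved, stub_generate)
refused = answer_with_sources(
    [(0.20, "cafeteria.md", "The cafeteria opens at noon.")], stub_generate
)
```

A guard like this reduces the risk of invented answers but does not eliminate it; the prompt sent to the model usually carries the same instruction.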

Technical operation: From raw data to the generated response

To understand the power of RAG, you have to look under the hood and follow the flow of data. This process transforms inert documentation into active intelligence through several critical steps that require expertise in data engineering.

Data Ingestion and Preprocessing

It all starts with collecting documents from a variety of sources. Data preprocessing is an often underestimated step, yet it is vital for final performance. It involves cleaning the text, removing noise (headers, useless footers, HTML tags), and cutting the information into digestible pieces called “chunks.” The quality of this splitting directly influences the relevance of future results. If chunks are too short, you lose the context needed to understand them. If they are too long, the relevant information is drowned out in noise.
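As a rough illustration of the cleaning and chunking step, the sketch below strips HTML tags and splits text into overlapping word windows. The function names, the default sizes, and the choice of word-based windows are assumptions for the example; production pipelines often split on sentences, headings, or tokens instead.

```python
import re


def clean_text(raw: str) -> str:
    """Strip HTML tags and collapse whitespace (a deliberately simple cleaner)."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    return re.sub(r"\s+", " ", no_tags).strip()


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of up to `chunk_size` words.

    Consecutive chunks share `overlap` words so that a sentence cut at a
    boundary still appears with some of its context in the next chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


page = "<p>Expense reports   must be <b>validated</b> by a manager.</p>"
cleaned = clean_text(page)
pieces = chunk_words(cleaned, chunk_size=4, overlap=1)
```

The overlap is the lever for the trade-off described above: it lets chunks stay short without losing the context that sits at their edges.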

Vectorization and Vector Databases

Once the text is split, it goes through a vectorization (or embedding) step. Specialized models transform these pieces of text into mathematical vectors, that is, long sequences of numbers that represent the semantic meaning of the content in a multidimensional space. These vectors are stored in vector databases like Pinecone, Weaviate, Qdrant, or Milvus. These databases are specifically designed to perform very fast similarity searches, a task for which they are much more efficient than traditional SQL databases.
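The shape of this step can be sketched with a toy stand-in. The `toy_embed` function below hashes words into a fixed number of buckets, so it only captures word overlap, not semantic meaning; it is an assumption made to keep the example self-contained, where a real system would call a trained embedding model. `InMemoryVectorStore` is likewise a hypothetical placeholder for a vector database.

```python
import hashlib
import math

DIM = 64  # toy dimensionality; real embedding models use far more dimensions


def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """Hash each word into one of `dim` buckets, then L2-normalize the counts.

    Stand-in only: this reflects shared vocabulary, not meaning.
    """
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class InMemoryVectorStore:
    """Hypothetical placeholder for a vector database: a list of embedded chunks."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, list[float], str]] = []

    def add(self, chunk_id: str, text: str) -> None:
        """Embed a chunk and store its ID, vector, and original text."""
        self.rows.append((chunk_id, toy_embed(text), text))


store = InMemoryVectorStore()
store.add("handbook-001", "Vacation requests are submitted through the HR portal.")
store.add("handbook-002", "Expense reports are validated by the line manager.")
```

Storing the original text next to its vector matters: the vector is used to find the chunk, but it is the text that is later handed to the language model.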

The Retrieval Mechanism

When the user asks a question, it is also vectorized in real time by the same embedding model. The system then performs a semantic search in the vector database to identify the text “chunks” whose vectors are mathematically closest to the question. This is where the magic of information retrieval happens: the system is not looking for the same keywords, but for the same meaning or intent.
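"Mathematically closest" is commonly measured with cosine similarity, although other distance metrics are used as well. The sketch below ranks chunks against a query vector with a brute-force scan; the three-dimensional vectors are hand-made for illustration rather than produced by a real embedding model, and vector databases rely on approximate indexes instead of scanning every row.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(
    query_vec: list[float],
    indexed_chunks: list[tuple[str, list[float]]],
    k: int = 3,
) -> list[tuple[float, str]]:
    """Return the k (score, text) pairs most similar to the query, best first."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in indexed_chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hand-made vectors for illustration only.
indexed = [
    ("Expense reports are validated by the manager.", [0.9, 0.1, 0.0]),
    ("The cafeteria opens at noon.", [0.0, 0.2, 0.9]),
    ("Travel outside the EU needs prior approval.", [0.7, 0.6, 0.1]),
]
query = [1.0, 0.2, 0.0]  # stands in for the embedded user question
best = top_k(query, indexed, k=2)
```

With these vectors, the expense-report chunk ranks first and the travel-approval chunk second, while the cafeteria chunk is left out.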

Augmented Generation

The most relevant text fragments are extracted and sent to the LLM (like GPT or Claude) together with the user's question, in a structured instruction called a “prompt”. The language model then acts as an intelligent synthesis processor: it reads the information provided in context and writes a fluid, coherent, and reasoned response. It is this combination that defines how RAG optimizes AI responses.
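A minimal sketch of how such a prompt might be assembled is shown below. The wording of the instructions and the `build_prompt` name are assumptions for the example, and the call that sends the prompt to a model is deliberately left out because it depends on the provider.

```python
def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the user's question into one instruction."""
    # Number each chunk so the model can cite the passages it relied on.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "Cite the bracketed source numbers you relied on. "
        "If the context does not contain the answer, reply \"I don't know.\"\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "Who validates expense reports?",
    [
        "Expense reports are validated by the line manager.",
        "Trips outside the EU require director approval.",
    ],
)
```

Numbering the chunks is a simple design choice that makes citations possible: the application can map each cited number back to its source document.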

Security challenges and current limitations

While RAG is powerful, its implementation requires absolute rigor, especially on data security. One of the classic pitfalls is the management of access rights. If your vector database contains all company documents without distinction, nothing stops an intern from asking the chatbot “How much does the CEO earn?” and getting an answer extracted from a confidential payslip that was indexed by mistake.

Rights management at the retrieval level is therefore critical to ensure GDPR compliance and industrial confidentiality. The system should filter the documents down to those the user is allowed to access before anything is sent to the LLM for generation. This involves synchronizing the security metadata of your original documents with the vector database.
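One way to picture this filtering is to attach the allowed groups to every chunk and drop whatever the user may not see before any similarity search or generation happens. The `Chunk` structure, the group names, and the file names are hypothetical; the sketch filters a plain Python list, whereas a vector database would typically apply an equivalent metadata filter at query time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    source: str
    allowed_groups: frozenset  # copied from the original document's permissions


def visible_chunks(chunks: list, user_groups: set) -> list:
    """Keep only chunks that share at least one group with the user.

    A chunk with no allowed groups is never returned (deny by default).
    """
    groups = frozenset(user_groups)
    return [chunk for chunk in chunks if chunk.allowed_groups & groups]


corpus = [
    Chunk("Onboarding checklist for new hires.", "handbook.pdf", frozenset({"all-staff"})),
    Chunk("Executive compensation details.", "payroll.xlsx", frozenset({"hr-payroll"})),
]

intern_view = visible_chunks(corpus, {"all-staff"})
payroll_view = visible_chunks(corpus, {"all-staff", "hr-payroll"})
```

Filtering before retrieval, rather than after generation, means confidential text never reaches the prompt in the first place.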

Another major challenge lies in the quality of the source data. The “Garbage In, Garbage Out” principle fully applies here. If your corporate documentation is contradictory, outdated, or poorly structured, RAG will produce inconsistent responses regardless of the strength of the underlying model. A data governance strategy is often the essential prerequisite. As a rule of thumb, the success of a RAG project depends roughly 80% on data engineering and 20% on the AI itself.

Transform your data into active intelligence

Retrieval-Augmented Generation is no longer an experimental technology reserved for research laboratories. It has become a standard approach for deploying useful, reliable, and secure generative AI in business. It lets you get more value from your data assets by making them instantly accessible and usable by all of your employees.

At Scroll, we don't just implement isolated technical building blocks. We design the complete architecture, from the initial audit of your data to the production deployment of robust and secure hybrid AI systems. If you want to turn your inert document repositories into real performance levers, we can define your first scope of application together.

Do you want a concrete demonstration of what RAG can do with your own data? Contact the Scroll team to schedule a preliminary audit and uncover the hidden potential of your information.

FAQ

RAG (Retrieval-Augmented Generation) is an architecture that combines the power of an LLM (like GPT) with an external knowledge base. Unlike a classic AI that relies solely on its training, RAG looks for up-to-date information in your internal data before formulating a response, which keeps answers relevant and in context.

Fine-tuning consists of retraining a model to teach it new knowledge, which involves high LLM training costs and rigidity in the face of change. RAG, on the other hand, connects the model to a dynamic database. It is ideal for frequent knowledge updates and for factual grounding, because it does not require retraining to assimilate new information.

Language models can invent facts when they lack information. The RAG architecture greatly reduces this risk by requiring the AI to use only reliable sources of information retrieved during the search. If the information is not found in the vector database, the system is configured to indicate that it does not know the answer, which makes its answers far more reliable.

Yes, if the architecture is well designed. Unlike public models where your data can be used for training, a private RAG system allows you to maintain total control. You can manage access rights at the retrieval level (a user only sees what they are allowed to see) and ensure GDPR compliance by hosting data on secure servers or via private instances.

RAG is extremely flexible thanks to data vectorization. It can draw on internal data in almost any textual form: technical PDFs, legal contracts, intranet pages, support tickets, emails, or SQL databases. Once processed by the retrieval system, this unstructured data becomes a source of knowledge that can be searched in natural language.
